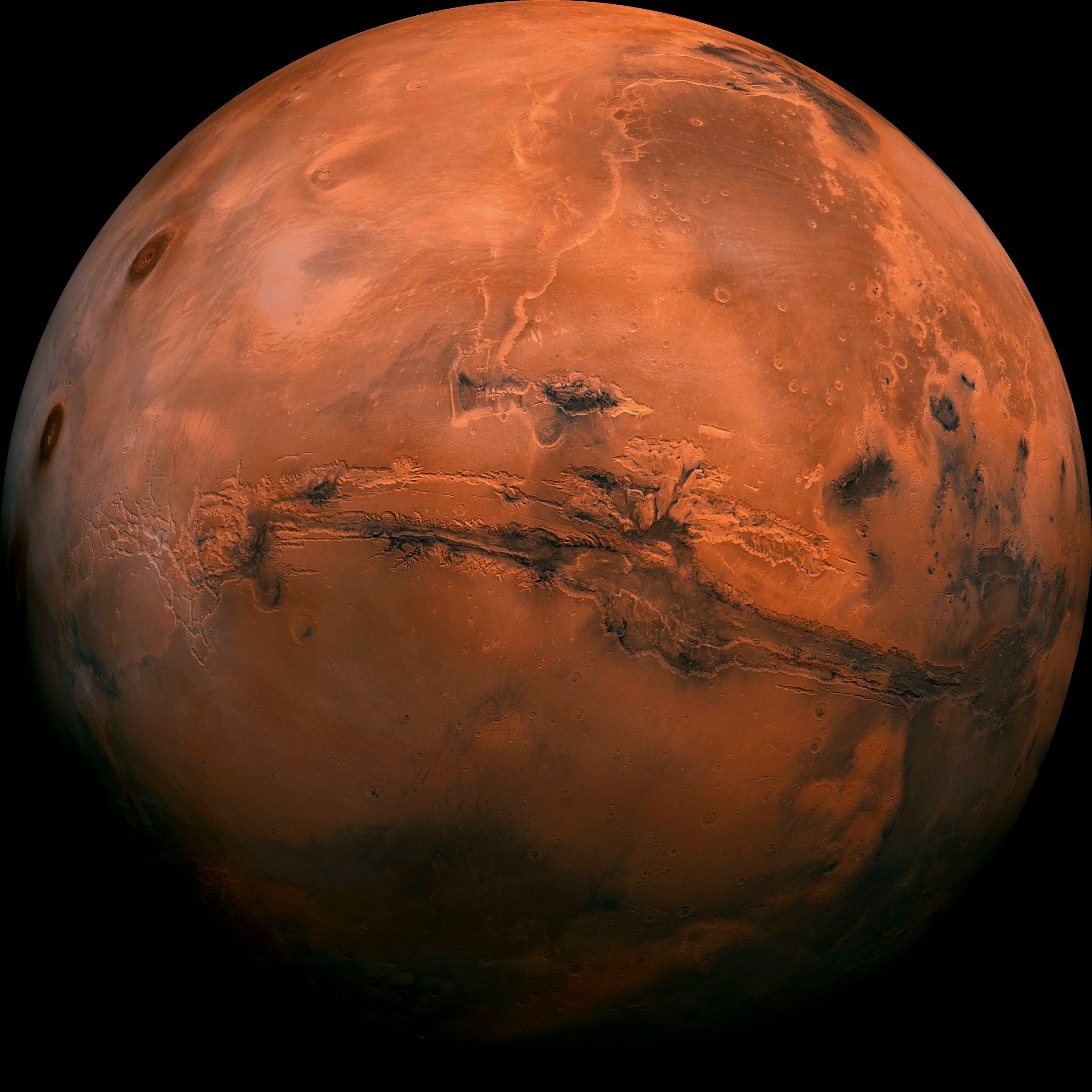

Powered by curiosity AI and using modified rapid prototyping and industrial manufacturing techniques, a large-scale industrial robotic arm creates and poetically explores a full-color, full-scale (144 million km²), three-dimensional twin of Mars on Earth.

Magnaforma

Led by artist-engineer Shawn Brixey and research faculty in the School of the Arts, College of Engineering, and College of Health Professions at Virginia Commonwealth University (VCU), and working with computer science faculty and students from Louisiana State University (LSU), the team has created Magnaforma, a large-scale, award-winning, multi-university arts research exhibition. It is designed to harness the power and precision of a large industrial robotic arm whose movement over time will physically create the entire surface topography of Mars here on Earth.

Using modified, large-scale rapid prototyping and industrial manufacturing techniques, the robot will create a full-color, one-to-one scale (144 million km²), three-dimensional mirror twin of the planet. However, rather than simply rendering the planet, the robotic arm, empowered by curiosity AI, is given creative freedom to invent lyrical movement and performative gestures that allow it to travel the terrain on its own, and for us to dwell in the meditative space of discovery with it.

Data from NASA's twenty-three missions to Mars are used to create a complex, three-dimensional environment where the robot can play, perform, and explore.

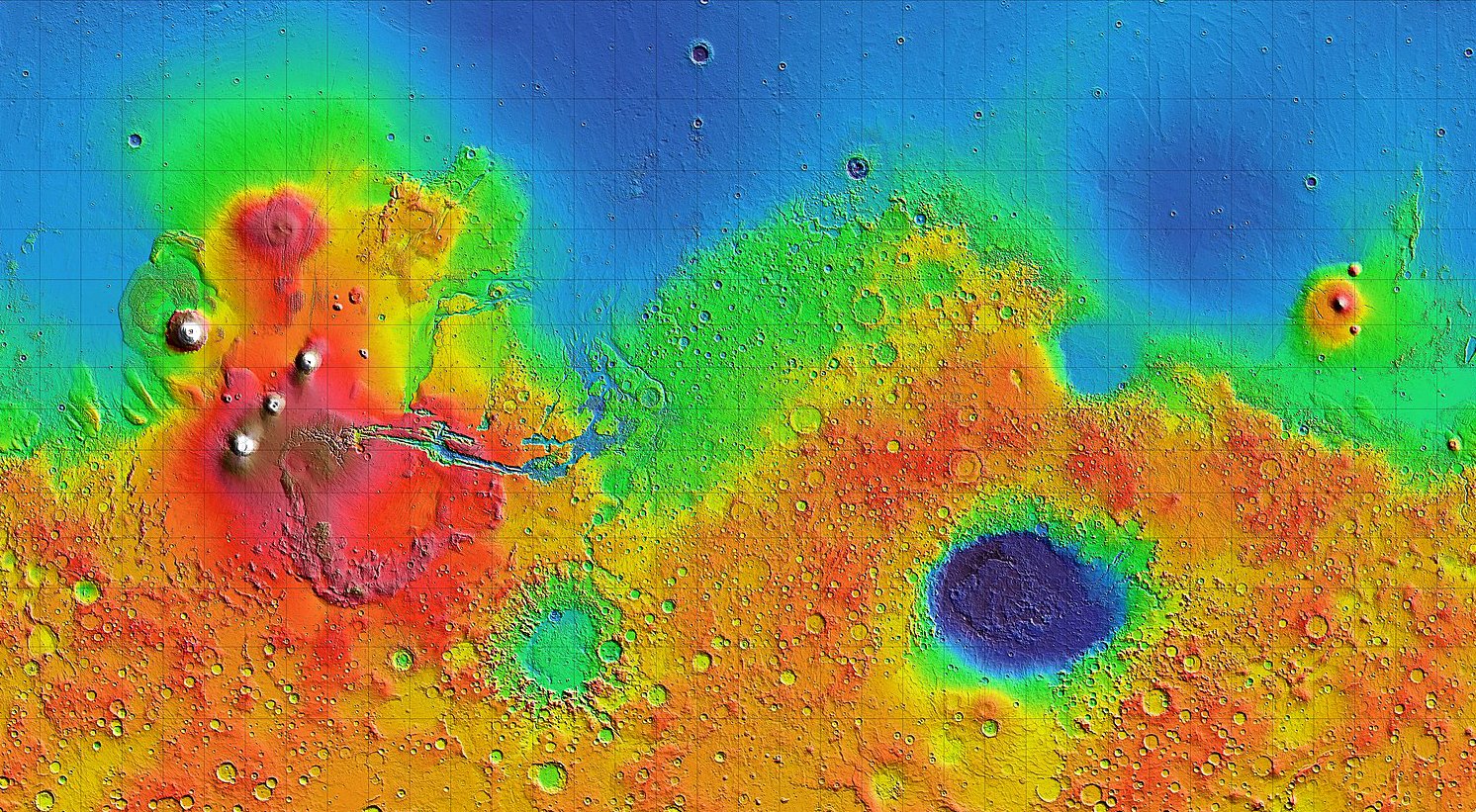

Imaging and terrain data are acquired from multiple sources, including the High Resolution Imaging Science Experiment (HiRISE) onboard NASA's Mars Reconnaissance Orbiter (MRO). The digital terrain data is used to create a contiguous planetary surface grid with a spatial resolution of 1 km.
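To give a sense of the scale involved, the short sketch below estimates the surface area of Mars and the number of cells in a grid with 1 km spacing. It is only a back-of-the-envelope illustration: it treats Mars as a perfect sphere with the commonly cited mean radius of 3389.5 km, and the function names are hypothetical rather than part of the project's software.

```python
import math

# Assumption: Mars approximated as a sphere with its commonly cited mean radius.
MARS_MEAN_RADIUS_KM = 3389.5


def sphere_surface_area_km2(radius_km):
    """Surface area of a sphere, 4 * pi * r^2, in square kilometres."""
    return 4.0 * math.pi * radius_km ** 2


def grid_cell_count(area_km2, resolution_km):
    """Approximate number of square cells of side `resolution_km` covering the area."""
    return round(area_km2 / resolution_km ** 2)


area = sphere_surface_area_km2(MARS_MEAN_RADIUS_KM)
cells = grid_cell_count(area, resolution_km=1.0)
print(f"surface area: {area / 1e6:.1f} million km^2")
print(f"cells at 1 km resolution: {cells:,}")
```

Under these assumptions the area comes out at roughly 144 million km², matching the figure quoted for the full-scale twin, and a 1 km grid needs on the order of 144 million cells.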

The robotic arm carries a planchette: a large, square, open, illuminated frame outfitted with thousands of programmable LEDs. Similar to rapidly changing frames in a film, Magnaforma's LEDs construct a planet-sized object by spatially mirroring the three-dimensional surface features of the surrounding terrain and accurately reproducing its colors. This process results in a stunning, abstract, mirage-like window that reflects the robot's place and time on the planet.

Gliding across the planet's surface like a snowboarder, the planchette's LEDs traverse a three-dimensional volume of layered colors derived from NASA's Mars surface, horizon, and sky data. From the viewer's perspective, the robot and its luminous frame form a mesmerizing, brightly colored portal reminiscent of James Turrell's work, floating with the playful abandon of a child's hand in the wind outside the window of a moving vehicle.
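One way to picture how a frame of LEDs could sample a layered color volume is sketched below. This is a toy model under loud assumptions, not the installation's actual rendering code: the terrain function, the three layer colors, the horizon band thickness, and every function name are hypothetical stand-ins for data that would really come from NASA sources.

```python
import math

# Hypothetical layer colors (RGB, 0-255); real values would be derived from NASA imagery.
SURFACE = (156, 77, 44)
HORIZON = (214, 170, 120)
SKY = (180, 150, 130)


def terrain_height(x, y):
    """Toy stand-in for a digital terrain model: smooth hills between -0.5 and 0.5."""
    return 0.5 * math.sin(x) * math.cos(y)


def color_at(x, y, z, horizon_band=0.3):
    """Layer color at a point: surface below the terrain, a thin horizon band, then sky."""
    h = terrain_height(x, y)
    if z <= h:
        return SURFACE
    if z <= h + horizon_band:
        return HORIZON
    return SKY


def frame_led_positions(center, side, leds_per_edge):
    """LED positions around the perimeter of a square frame standing upright in the x-z plane."""
    cx, cy, cz = center
    half = side / 2.0
    step = side / leds_per_edge
    points = []
    for i in range(leds_per_edge):
        t = -half + i * step
        points.append((cx + t, cy, cz - half))  # bottom edge
        points.append((cx + half, cy, cz + t))  # right edge
        points.append((cx - t, cy, cz + half))  # top edge
        points.append((cx - half, cy, cz - t))  # left edge
    return points


def render_frame(center, side=1.0, leds_per_edge=8):
    """Color for every LED given the current frame position in the volume."""
    return [color_at(*p) for p in frame_led_positions(center, side, leds_per_edge)]
```

Calling `render_frame` repeatedly as the frame center moves yields one set of LED colors per position, which is the film-frame analogy in miniature: high above the toy terrain every LED shows the sky color, and as the frame descends the horizon and surface layers appear along its lower edge.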

This physical encounter is not didactic or demonstrative; rather, it evokes deep proprioceptive sensations, emerging as an unsettling yet sublime experience. The juxtaposition of its physical size and conceptual scale defies logic: an unassuming industrial robot quietly constructs a full-scale celestial body before our eyes, yet its actions are anthropomorphically familiar, majestic, and ephemeral – like a live performance.

The image above illustrates an industrial robot prototype learning to use curiosity AI developed for Magnaforma, which allows it to more imaginatively interpret the NASA data that informs the movement of its illuminated planchette in space. As the robot learns, it seeks to emulate more human-like behaviors, expressing creative patterns and exploring new situations by incorporating practices associated with curiosity into its algorithmic models. This feedback loop motivates the robot and is expressed in the dramatically visible and uniquely three-dimensional surface topography that it creates from the data of the planet surrounding it. Developing its own rhythm, the algorithmic models might at times amplify exploration by reinforcing behavior that covers more territory and yields new information about its environment. Exploration and the drive for new information can periodically blend and shift, with the system prioritizing more virtuosic movement, gestural expression, and interpretive phrases. Algorithmic behaviors supporting the ability to explore, express, linger, remember, and forget begin to create actions that seem anthropomorphically familiar, majestic, and ephemeral – much like those in a live performance.
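The text does not specify how the curiosity AI is implemented. As a minimal sketch of the general idea, the example below uses a count-based novelty bonus, a common generic technique in which rarely visited cells score higher, blended with a hypothetical "expressiveness" score. The grid world, the weighting scheme, and all names are assumptions made for illustration only.

```python
import math
from collections import Counter


def novelty_bonus(visits):
    """Count-based curiosity bonus: 1.0 for an unseen cell, shrinking with repeat visits."""
    return 1.0 / math.sqrt(1 + visits)


def neighbors(cell, width, height):
    """Four-connected neighbors of a cell that lie inside the grid."""
    x, y = cell
    candidates = [(x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)]
    return [(cx, cy) for cx, cy in candidates if 0 <= cx < width and 0 <= cy < height]


def choose_next(cell, visits, width, height, expressiveness=None, explore_weight=1.0):
    """Pick the neighbor with the best blend of novelty and (hypothetical) expressiveness."""
    expressiveness = expressiveness or (lambda c: 0.0)

    def score(c):
        return (explore_weight * novelty_bonus(visits[c])
                + (1.0 - explore_weight) * expressiveness(c))

    return max(neighbors(cell, width, height), key=score)


def explore(start, steps, width, height):
    """Walk the grid greedily toward less-visited cells, tracking visit counts."""
    visits = Counter({start: 1})
    path = [start]
    cell = start
    for _ in range(steps):
        cell = choose_next(cell, visits, width, height)
        visits[cell] += 1
        path.append(cell)
    return path, visits
```

With `explore_weight=1.0` the walker is driven purely by novelty and tends to spread out over new territory. Lowering the weight shifts priority toward whatever the `expressiveness` function rewards, a rough analogue of the blend between exploration and gestural focus described above.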

The project is also designed as a laboratory for community engagement with other creators. Museum maker days, for example, could offer Magnaforma’s unique environment to local dance companies interested in exploring movement on remote landscapes and distant horizons, or to amateur historians discussing notions of presence and absence, converting photographs of loved ones lost to COVID into 3D images whose likeness the robot searches for in the landscape of a distant world.

The robot is programmed using a novel Unity-based animation learning environment developed at VCU for this project. Through a mixed reality headset or smartphone, Magnaforma collaborators see a full-size virtual twin of the robot, with its movements overlaid in real time on its real-world counterpart.

Using gestural arm and hand movements (like moving a mouse in space rather than on a flat desk), the system allows one to create and edit complex choreographed movements of the robot in real time, record them, and play them back in the real world. NASA data repurposed for this installation allows the robot, using AI, to imaginatively interpret space, creating a highly accurate but fantastically abstracted landscape of Mars.
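The record-and-playback idea can be illustrated with a small keyframe recorder. This is a sketch in Python rather than the Unity environment the project actually uses, and it assumes poses are simple tuples of numbers (for example, a hand position) with linear interpolation between samples. The class and method names are hypothetical.

```python
from bisect import bisect_right


class GestureRecording:
    """Timestamped poses that can be played back at any time by linear interpolation."""

    def __init__(self):
        self.times = []
        self.poses = []

    def record(self, t, pose):
        """Append a pose sampled at time t; timestamps must strictly increase."""
        if self.times and t <= self.times[-1]:
            raise ValueError("timestamps must be strictly increasing")
        self.times.append(t)
        self.poses.append(tuple(pose))

    def pose_at(self, t):
        """Interpolated pose at time t, clamped to the first and last recorded poses."""
        if not self.times:
            raise ValueError("nothing recorded")
        if t <= self.times[0]:
            return self.poses[0]
        if t >= self.times[-1]:
            return self.poses[-1]
        i = bisect_right(self.times, t)
        t0, t1 = self.times[i - 1], self.times[i]
        a = (t - t0) / (t1 - t0)
        return tuple(p0 + a * (p1 - p0) for p0, p1 in zip(self.poses[i - 1], self.poses[i]))


rec = GestureRecording()
rec.record(0.0, (0.0, 0.0, 0.0))
rec.record(2.0, (2.0, 4.0, 6.0))
midpoint = rec.pose_at(1.0)  # halfway between the two recorded poses
```

Editing a choreography then amounts to inserting, moving, or deleting keyframes, while playback samples `pose_at` at the controller's update rate.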

Magnaforma can be seen through the lens of many movements and experimental arts lineages that inform media arts practice. Artistically, it honors and seeks to expand the meditative and romantic monumentality of Robert Smithson, deepen the perceptual psychology explored by James Turrell, and build on the algorithmic spaces of Maya Lin and the computational choreography of William Forsythe.

The project is equally indebted to the disciplines of science and engineering. Although creative problem solving differs across these fields of inquiry, Magnaforma weaves together a unique collaboration that shares a sense of awe, wonder, and critical engagement with the world, the future, and ourselves in it. The discovery, technical challenges, and invention required to realize the project provide a unique environment of intellectual communion for explorers at the intersection of art and technology.

The success of our collaboration and our aim to broaden access and creative involvement align with the NEA's objectives for media arts projects. These goals include integrating arts with technology, bridging digital divides, fostering digital imagination and literacy, and enhancing computer science engagement within local communities and the arts.

Bucknell University in Lewisburg, Pennsylvania, and Louisiana State University in Baton Rouge will host the inaugural exhibitions of Magnaforma. Each exhibition includes public programs, workshops, and opportunities for community members to use the creative coding environment, robotic equipment, and museum context to shape their own ideas, which will be presented or performed as a collaborative part of the project through Open-Access weekends and formal campus class curriculum.

Each exhibition also includes scholarly lectures on robotics and AI, creator days for university students, guided tours for K-12 students, and a color catalog. Each museum hosts approximately 25,000 visitors annually and engages in significant outreach to underserved communities. The campuses ensure that all exhibitions are accessible: they are laid out to accommodate wheelchairs, and relevant materials are printed in accessible fonts and sizes.